Vinegar Tom Video Projection Mapping

A collaboration between my Advanced Interactivity class and the Theatre Program’s production of Vinegar Tom. For this production, my students created their own video projection mapping software to project their videos onto five different locations on the stage.

FIRE DREAMS

Description:

This project takes as its premise that when we walk through cities, our bodies enter the dreams of other people who’ve walked there. New media artist Matt Roberts and poet Terri Witek map the city by floating various “dreamers” over interesting metropolitan spaces. City wanderers then follow this walkable dream map via an augmented reality phone app. Along the way, they are offered chances to see a dream, hear a dream, text a dream of their own, send a photo, and perform various other transmittable acts. These become part of the living dream map of the city.

How it works:

This project uses Layar, a free augmented reality application for mobile devices. Participants can download the Layar app and follow the Fire Dreams map, which is designed as an international project adaptable to any urban space.

Project Website:

http://thefiredreams.com

Last Week’s Tumblr

A few images from last week’s sketchbook, posted on Tumblr: http://ibmattroberts.tumblr.com/

10 Projects Using Real-Time Data

My work Waves was listed in this article about artworks that use real-time data. Make sure you check out the inspiring works listed in the article. I am honored to be among such great works!

http://postscapes.com/networked-art-10-projects-using-real-time-data

FILE Festival Sao Paulo Brazil

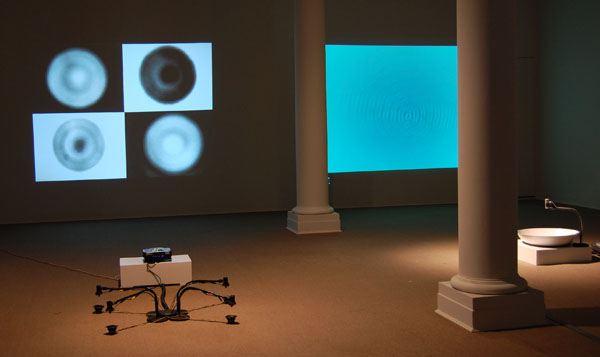

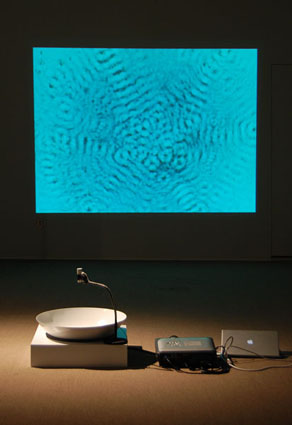

I recently attended the FILE festival in Sao Paulo, Brazil, where I exhibited my work Waves and participated in the FILE symposium. I took a few shots of my work with my iPhone camera, so the quality is not that great, but they will give you a sense of the installation. I am honored to have been part of such a great festival and to have exhibited with some fantastic artists.

Waves

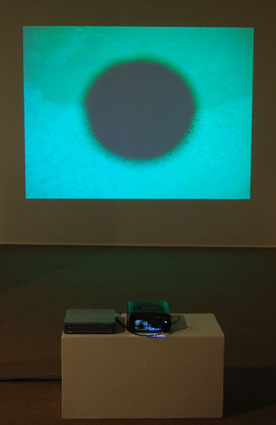

This artwork responds to the current size and timing of the waves in the ocean closest to its location. Every half hour, the most recent data from the nearest ocean buoy station is downloaded. Custom software takes the current wave height and dominant wave period from the buoy and transforms that information into a low-frequency sound wave. As the size and timing of the waves in the ocean change, so does the frequency of the sound waves produced by the software. These sound waves shake a bowl of water sitting on top of a speaker. The shaking produces wave patterns in the bowl, which are captured by a video camera, modified by the software, and projected onto a wall. As the waves in the ocean change in size and frequency, the waves in the bowl change too. The result is a continuous variation in the shapes and patterns one sees and hears, reflecting the constantly changing conditions of the ocean.
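The buoy-to-sound step can be sketched roughly as follows. This is a minimal Python sketch, not the software used in Waves: the input ranges, the 20-60 Hz output band, and the choice to map period to pitch and height to loudness are all assumptions made for illustration.

```python
import math

# Hypothetical ranges chosen for illustration; the piece's real mapping is not documented.
PERIOD_RANGE_S = (4.0, 20.0)   # dominant wave period, seconds
HEIGHT_RANGE_M = (0.0, 4.0)    # wave height, meters
FREQ_RANGE_HZ = (20.0, 60.0)   # low-frequency output band, Hz

def scale(value, in_range, out_range):
    """Linearly map value from in_range onto out_range, clamping at the ends."""
    lo, hi = in_range
    t = max(0.0, min(1.0, (value - lo) / (hi - lo)))
    return out_range[0] + t * (out_range[1] - out_range[0])

def buoy_to_tone(wave_height_m, dominant_period_s):
    """Return (frequency_hz, amplitude) for one buoy reading."""
    # Reversed output range: long-period swell sits at the bottom of the band.
    freq = scale(dominant_period_s, PERIOD_RANGE_S, FREQ_RANGE_HZ[::-1])
    # Bigger waves drive the speaker harder (amplitude 0.1 to 1.0).
    amp = scale(wave_height_m, HEIGHT_RANGE_M, (0.1, 1.0))
    return freq, amp

def render_sine(freq_hz, amp, seconds=1.0, sample_rate=8000):
    """Sample a sine tone as a list of floats in [-amp, amp]."""
    n = int(seconds * sample_rate)
    return [amp * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

freq, amp = buoy_to_tone(wave_height_m=2.0, dominant_period_s=12.0)
samples = render_sine(freq, amp)
```

With these assumed ranges, a 2 m swell with a 12 s period maps to a 40 Hz tone at amplitude 0.55. Refetching the reading every half hour and regenerating the tone would keep the patterns in the bowl tracking the ocean.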

Waves can be shown in various forms; below are examples of works driven by real-time wave data.

Wind

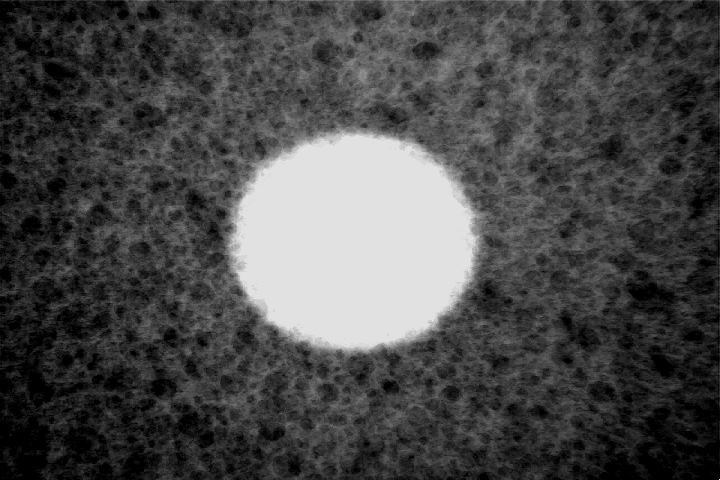

Wind is a continuation of my interest in visualization of real-time weather data. This work responds to real-time wind data from the National Weather Service to create a moving abstract image.
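A minimal sketch of how a wind report could drive a moving image: convert the reported speed and direction into a drift vector and move a field of points with it. Wind direction is conventionally reported as the direction the wind blows from, measured clockwise from north, so the sketch turns it around before moving anything. The function names, the screen-coordinate convention, and the particle approach are assumptions for illustration, not the method used in Wind.

```python
import math

def wind_to_vector(speed, direction_from_deg):
    """Convert (speed, direction the wind blows FROM, degrees clockwise
    from north) into a screen-space drift (dx, dy); screen y grows downward."""
    # The air moves toward the opposite bearing.
    toward = math.radians((direction_from_deg + 180.0) % 360.0)
    dx = speed * math.sin(toward)    # east component
    dy = -speed * math.cos(toward)   # north component, flipped for screen y
    return dx, dy

def step_particles(particles, speed, direction_from_deg, width, height, dt=1.0):
    """Advance every (x, y) point by the wind drift, wrapping at the edges."""
    dx, dy = wind_to_vector(speed, direction_from_deg)
    return [((x + dx * dt) % width, (y + dy * dt) % height) for x, y in particles]

# A north wind (blowing from 0 degrees) pushes points down the screen.
points = [(10.0, 20.0), (300.0, 150.0)]
points = step_particles(points, speed=5.0, direction_from_deg=0.0, width=640, height=480)
```

Calling `step_particles` once per frame with the latest reading gives a drifting texture whose speed and heading follow the weather service data.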

Interactivity and Art Finals 2010

Final projects from my class DIGA 231 Interactivity and Art. This is an introductory class where students learn how to program their own interactive software using Max/MSP and how to use Arduinos. Students also learn how to use a variety of switches and sensors such as distance, light, pressure, knock, temperature, RFID and heart rate sensors.

Wind Sketches

Some test screenshots from a new piece I am working on that uses real-time wind data to generate new images.

DAF/404 Taipei Taiwan

In November 2010 I participated in the Digital Arts Festival Taipei 2010. I was invited by the 404 Festival and presented my work Every Step. During the opening weekend I met with participants and outfitted them with cameras to create animations; you can see all the animations created by participants here.

Waves Walks

I have a solo show coming up at the Duncan Gallery of Art at Stetson University. The show, entitled Waves Walks, features two bodies of work: a series that uses real-time wave buoy data to generate sounds and images, and a series based on walking. The opening reception is August 27 from 6:00 to 8:00 pm, and the show runs until October 28. Below are some in-progress installation shots.

The two pieces pictured below use real-time wave height and period data from wave buoys off the coast of Florida. Custom software translates the buoy data into low-frequency sound waves. The sound waves shake objects such as bowls of water, and these objects respond by forming abstract patterns.

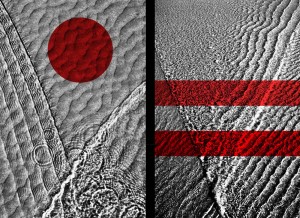

I created software that abstracts images I took while swimming in the ocean. Each projection has a design element which changes size according to the current size of the waves off the coast of Florida.

EMP: Electronic Mobile Performance

EMP: Electronic Mobile Performance is a collaborative, multimedia project involving faculty and students from Stetson University’s Digital Arts program. The group’s primary mission is to explore collaborative artistic production using new technologies and to find new ways of presenting art outside of traditional venues. EMP was founded and is directed by Matt Roberts, Associate Professor of Digital Arts at Stetson University. http://electronicmobileperformance.com

An earlier incarnation of EMP was a group created and directed by Matt Roberts known as MPG: Mobile Performance Group. MPG presented a number of site-specific performances at festivals and conferences throughout the country, including ICMC: International Computer Music Conference, Conflux, and ISEA: Inter-Society for the Electronic Arts. For more information about MPG, please see the archived site.

MIRROR PAL CD RELEASE

Last summer I worked with students Hogan Birney, Sean Kinberger and David Plakon on creating an interactive live audio/visual performance for Mirror Pal’s CD release party. The students asked me to help them develop a multimedia performance for the party, and I was more than happy to help. We developed a live multiple-camera setup for the band’s stage performance, which allowed them to mix live stage shots, prerecorded video clips and real-time video manipulation. To do this, we modified affordable security cameras so they could be easily placed on stage and built a mixing station to switch between the cameras. We also created our own software to mix the live footage with prerecorded clips and add effects in real time. Audience members could also submit text messages, which were mixed with the live images and projected during the performance. Finally, we created an interactive photo booth where audience members could sit and create short animations that were used during the band’s performance. The project was very ambitious for three students, but they did an outstanding job. Here is some video they created to document the event.

New Interface for MPG @ the Intermedia Festival

MPG: Mobile Performance Group was invited to perform at the Intermedia Festival hosted by Indiana University Purdue University Indianapolis. For the performance I developed an interface for the iPhone/iPod touch using the OSC-based mrmr app. The interface allows the public to control the manipulation of live video and to send text messages that become part of the live video projection. Users are able to mix video, choose video clips, apply effects, and use the iPhone’s accelerometer to rotate and position the text and image. The festival was a blast, and I will post some documentation soon.
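The accelerometer control can be sketched as a mapping from tilt readings to a rotation angle and screen position for the text. This Python sketch assumes the two readings arrive in g (roughly -1 to 1) and have already been unpacked from incoming OSC messages; the OSC transport and mrmr’s actual message addresses are not modeled, and the `TextTransform` class and its smoothing factor are hypothetical. It is not the interface used in the performance.

```python
import math

def clamp(v, lo=-1.0, hi=1.0):
    """Keep a reading inside the assumed -1..1 g range."""
    return max(lo, min(hi, v))

class TextTransform:
    """Hypothetical mapping from phone tilt to text rotation and position."""

    def __init__(self, smoothing=0.2, width=640, height=480):
        self.alpha = smoothing   # 1.0 = no smoothing; smaller = calmer motion
        self.ax = 0.0
        self.ay = 0.0
        self.width = width
        self.height = height

    def update(self, ax, ay):
        """Feed one accelerometer sample; return (angle_deg, x, y)."""
        # Exponential moving average to damp hand jitter.
        self.ax += self.alpha * (clamp(ax) - self.ax)
        self.ay += self.alpha * (clamp(ay) - self.ay)
        # Rotate the text toward the direction the phone is tilted.
        angle = math.degrees(math.atan2(self.ax, self.ay))
        # Map the -1..1 tilt range across the full projection.
        x = (self.ax + 1.0) / 2.0 * self.width
        y = (self.ay + 1.0) / 2.0 * self.height
        return angle, x, y

transform = TextTransform()
angle, x, y = transform.update(0.3, -0.1)
```

The smoothing is a design choice for a crowd-controlled projection: without it, every small hand tremor from a participant would make the text shake on the wall.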

CITY CENTERED

Last month I presented my project Every Step at City Centered – A Festival of Locative Media and Urban Community. The festival took place in the Tenderloin District of San Francisco and featured some great artists. It was a great event in an incredible city, and I miss it already!

Collaborative Multimedia Performance Class

Below are a few videos from the final performance of a class I taught called Collaborative Multimedia Performance. In this class, students learn how to collaborate with students from other majors such as Music, Art, and Computer Science. Students were taught a variety of techniques for live performance using electronics and software.

For this assignment, students used contact mics to turn an object into an instrument. They built their own contact mics and attached them to a table and bottles to create a percussive instrument. The microphones were run through guitar effects pedals and amplified. Students: David Plakon, Sean Kinberger and Zeb Long.

Student Ian Guthrie performs under the names Benny Loco and Uncle Abuelito. For this performance, Ian teamed up with student Jana Fisher to create a visual accompaniment for his music. Jana learned how to create her own VJ software, which allowed her to manipulate clips from the Twilight Zone to accompany his music. She built the software using the Max/Jitter programming environment.

Interactivity and Art Finals 2009

Final projects from my DIGA 231 Interactivity and Art class. Students in this class learn how to program their own interactive software using Max/MSP and how to use Arduino boards to create a link between the physical and digital worlds. Students also learn how to use a variety of switches and sensors such as distance, light, pressure, knock, temperature, RFID and heart rate sensors.

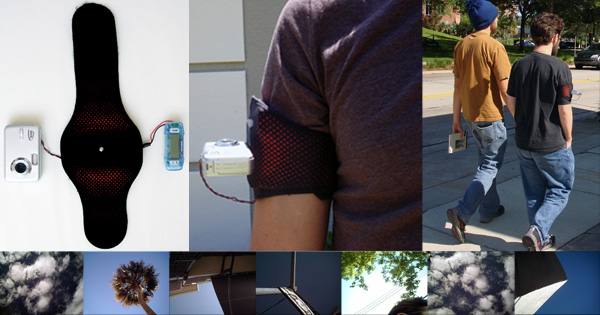

EVERY STEP

Every Step allows a participant to create a short experimental animation while they walk. Each participant is given an armband with a mounted camera and pedometer. The pedometer is mounted inside the armband and is connected to the camera. The camera is mounted on the armband and points towards the sky. The pedometer acts as a trigger for the camera and an image of whatever is above the participant is taken every time a step is made.

To create an animation the participant simply puts on the armband and takes a walk wherever he or she would like to go. When the participant returns from the walk the images are transferred from the camera’s memory and loaded into a custom software program. The software program uses the images to create a frame-by-frame animation and to create a soundtrack for the animation. When the program completes the animation, a DVD is made and given to the participant.
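The step-triggered capture and frame-by-frame assembly described above can be sketched in Python. This is a hypothetical model, not the original program: the `Frame` record, the function names, and the 12 fps playback rate are assumptions, and the soundtrack step is not modeled.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    step_index: int      # which step triggered the exposure
    timestamp_s: float   # time of the step, seconds since the walk began

def capture_on_steps(step_times):
    """Each pedometer pulse fires the camera once, so one frame per step."""
    return [Frame(i, t) for i, t in enumerate(step_times)]

def build_timeline(frames, fps=12):
    """Give every frame an equal slot, regardless of walking pace.
    Returns (display_time_s, frame) pairs."""
    return [(i / fps, frame) for i, frame in enumerate(frames)]

def animation_length_s(frames, fps=12):
    """Running time of the finished animation, in seconds."""
    return len(frames) / fps

# Simulated walk: 1,200 steps, one every 0.6 s (a 12-minute walk).
walk = capture_on_steps([i * 0.6 for i in range(1200)])
timeline = build_timeline(walk)
```

At the assumed 12 fps, a walk of 1,200 steps yields 1200 / 12 = 100 seconds of animation. Because each frame gets an equal slot, the film’s length depends on how many steps were taken, not on how fast the participant walked.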

Every Step was reviewed by We Make Money Not Art: “The techy work that really charmed me by its simplicity, poetry and melodies was Every Step.”

CYCLES FOR WANDERING

Cycles for Wandering is a project that mixes a pleasurable bike ride, real-time image manipulation, locative media and user participation to create a short experimental video. To create a video, a participant simply takes a five- to ten-minute bike ride. The bike is equipped with a GPS and a video camera that record the images and movements of the rider’s trip. A computer mounted on the bike uses the information gathered from the GPS to decide how to manipulate the images taken by the camera. When the rider returns from the trip, a DVD of the resulting video is made for them.
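One way an onboard computer could turn GPS data into a manipulation decision is sketched below: compute the rider’s speed from two consecutive fixes with the haversine formula, then scale that speed into an effect amount. The function names, the 8 m/s ceiling, the sample fixes, and the speed-to-effect rule are hypothetical; the piece’s actual decision logic is not described here.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def speed_mps(fix_a, fix_b):
    """Speed between two fixes, each given as (lat, lon, t_seconds)."""
    dist = haversine_m(fix_a[0], fix_a[1], fix_b[0], fix_b[1])
    dt = fix_b[2] - fix_a[2]
    return dist / dt if dt > 0 else 0.0

def effect_amount(speed, max_speed=8.0):
    """Hypothetical rule: faster riding means stronger manipulation, 0..1."""
    return max(0.0, min(1.0, speed / max_speed))

# Made-up fixes ten seconds apart.
fix_a = (29.0000, -81.3000, 0.0)
fix_b = (29.0005, -81.3000, 10.0)
amount = effect_amount(speed_mps(fix_a, fix_b))
```

The resulting `amount` could then set, for example, how strongly each camera frame is distorted, so that coasting produces calm images and sprinting produces wild ones.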

Recipient of Transitio Award, Transitio_MX 2007, Mexico City, Mexico

Cycles for Wandering Featured in Wired

Cycles for Wandering Featured in New York Times and Wall Street Journal

TRANSFERS

Transfers is a project exploring real-time generation of art and user participation in a mobile environment. Transfers allows a passenger in a taxi to generate a unique piece of art by giving the taxi driver directions. As the taxi moves through the city, the passenger experiences a real-time manipulation of live exterior video and audio taken from a camera and microphone mounted in the taxi. The taxi is also equipped with a GPS that feeds an onboard computer data such as speed and direction. This computer runs custom audio/video manipulation software and uses the GPS data to make decisions about how the live video/audio feed is manipulated and presented to the passenger. The manipulated live feed is displayed on two LCD screens and heard through the car’s stereo system. As the passenger tells the driver where to go, he or she becomes both performer and viewer, experiencing a unique piece of art generated by his or her decisions. The software also records this performance, and at the end of the drive the passenger receives a CD with a QuickTime movie file of the recorded performance.
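Since the taxi’s GPS supplies direction as well as speed, a direction-driven decision might compute the compass bearing between fixes and switch effects whenever the taxi turns sharply, so that each instruction the passenger gives produces a visible change. The effect names, the 45-degree threshold, and the `EffectSelector` class are hypothetical, not taken from the Transfers software.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2, in [0, 360), 0 = north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0

def heading_change(prev_deg, new_deg):
    """Signed smallest turn in degrees, in [-180, 180); positive = right turn."""
    return (new_deg - prev_deg + 180.0) % 360.0 - 180.0

class EffectSelector:
    """Step through a list of effects each time the vehicle turns sharply."""

    def __init__(self, effects, turn_threshold_deg=45.0):
        self.effects = effects
        self.threshold = turn_threshold_deg
        self.index = 0
        self.last_heading = None

    def update(self, heading_deg):
        if self.last_heading is not None:
            turn = heading_change(self.last_heading, heading_deg)
            if abs(turn) >= self.threshold:
                # Right turn selects the next effect, left turn the previous one.
                step = 1 if turn > 0 else -1
                self.index = (self.index + step) % len(self.effects)
        self.last_heading = heading_deg
        return self.effects[self.index]

selector = EffectSelector(["mirror", "posterize", "feedback", "invert"])
current = selector.update(bearing_deg(29.0, -81.3, 29.001, -81.3))
```

Using the smallest signed turn, rather than the raw difference between bearings, keeps a small turn across north (350 to 10 degrees) from being mistaken for a 340-degree swing.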

Supertudo, TVE Brasil, Rio de Janeiro 2007